AI is no longer a buzzword. It's already transforming how Reward teams work – from analysing data, to designing levelling frameworks, to drafting employee comms.

But with so much change so quickly, it's easy to feel behind. We know we need AI in our processes, but where do we even start? Which tools actually matter for Reward leaders? The possibilities feel endless.

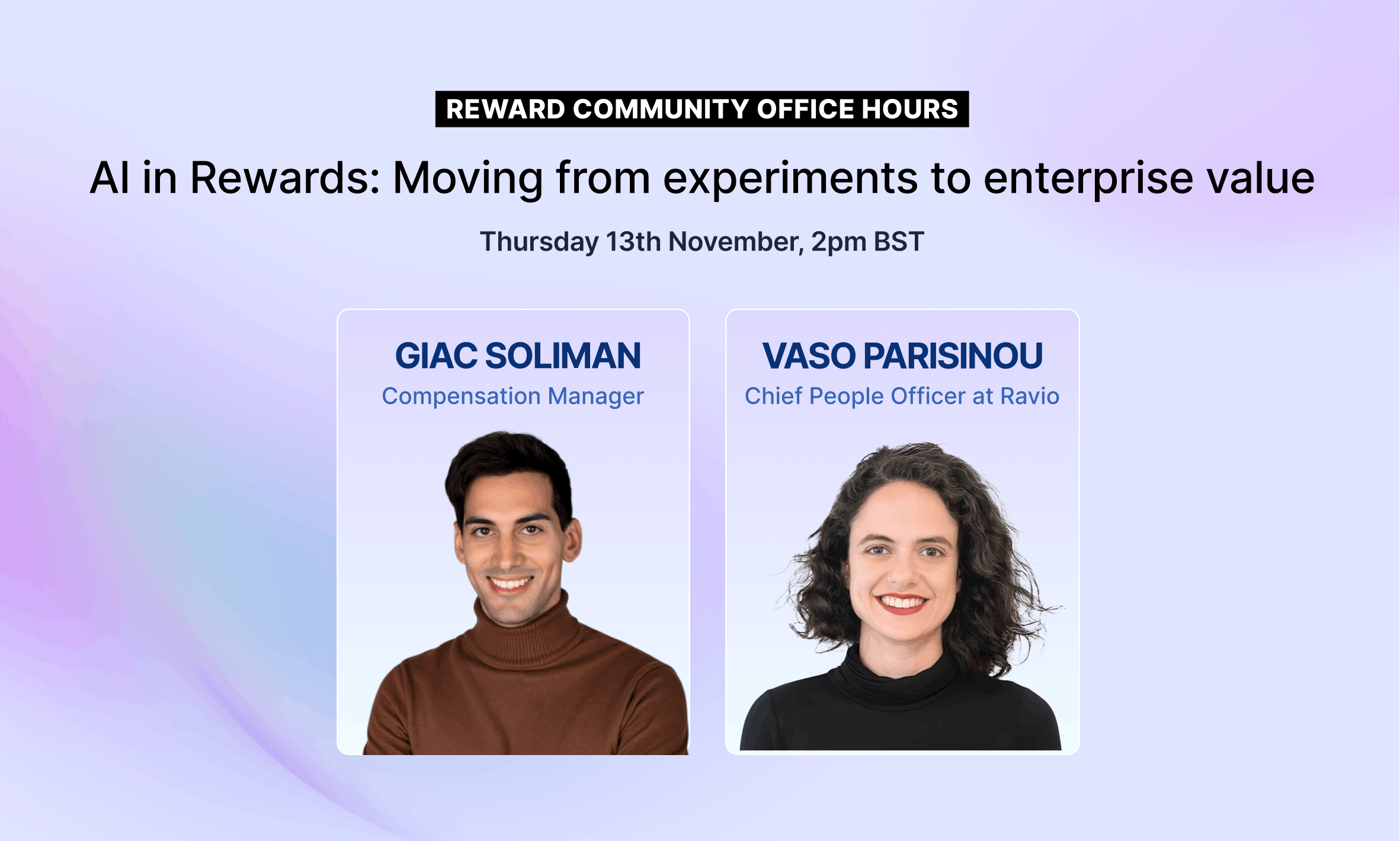

In this month's Reward Community Office Hour, Giac Soliman joined Ravio’s Chief People Officer, Vaso Parisinou, to discuss practical ways to apply AI in Reward, and the skills Reward professionals need to build (or buy) as AI adoption increases.

The discussion included:

- The skills Reward teams need to develop for the AI era

- Current practical use cases for applying AI in Reward

- Adopting AI responsibly, with governance and risk management in mind

Key takeaways from the webinar

If you're more of a reader than a watcher, here are a few of the most interesting insights from Vaso and Giac's discussion on AI in Reward.

Key takeaway 1: Develop a strong skeptic muscle before adopting AI Reward tools

People often over-trust AI vendors' claims about automating complex compensation processes. The real skill isn't mastering AI coding; it's becoming a skeptical operator who can question outputs, spot flaws, and understand where automation makes sense – and where it doesn’t.

A second essential mindset is continuous learning. AI evolves rapidly, and comfort comes through curiosity, not formal training. By experimenting with AI in small, low-stakes, personal contexts, Reward professionals can build intuition about how it behaves before applying it to critical business areas.

Key takeaway 2: Human judgment is the new bottleneck in the age of AI in Total Rewards

As AI takes over more data analysis and reporting, the real challenge shifts to human judgment: knowing how to weigh data patterns, analytics, and business context when making decisions.

Teams that demonstrate sound judgment about where AI adds value (and what risks need managing) are becoming indispensable across HR.

💡 Practical application: Keep a “judgment log” of difficult Reward decisions and your reasoning. Create prompts that challenge your assumptions rather than simply confirming them.

Key takeaway 3: Giac's five-step framework for responsible AI adoption in Total Rewards

Responsible AI adoption requires structure, discipline, and an emphasis on safety before scale.

Giac outlined a five-step framework for Reward teams to follow:

- Set principles first: Establish clear boundaries – humans own decisions, AI assists. Every use case should align with pay philosophy, compliance, and privacy standards.

- Start small: Begin with simple, high-volume tasks like summarising survey data or generating job descriptions, not high-stakes compensation decisions.

- Define inputs and outputs: Be explicit about what data AI can and cannot access. Poor or incomplete inputs lead to amplified errors.

- Add checkpoints: Human review remains non-negotiable. Reward partners must validate outputs, check for bias, and flag anything outside policy boundaries.

- Learn and scale: After each use, review where AI added value and where humans intervened. Refine prompts, strengthen governance, and expand carefully.

This approach encourages teams to understand how AI behaves before relying on it, so that ethical and operational safeguards evolve alongside its capabilities.

Key takeaway 4: Master data security and governance before experimentation

Strong partnerships with IT and Legal are fundamental to safe AI adoption. Using personal AI accounts for reward data can expose sensitive information and breach privacy laws. Corporate AI licenses, data governance, and audit trails are critical safeguards.

As Giac put it: “Data security and governance may not be exciting, but they are absolutely essential.”

January Reward Hours: Dive into Fintech Compensation – coming soon 👀